Titled “An On-device Deep Neural Network for Face Detection”, Apple’s latest blog post details the resource-intensive process of detecting faces in your photographs using the company’s custom-built CPUs and GPUs.

Apple acknowledges that its strong commitment to user privacy prevents it from using the power of the cloud for computer vision computations. Every photo and video sent to iCloud Photo Library is encrypted on your device before being uploaded and can only be decrypted by devices registered with the iCloud account.

Apple describes some of the challenges it faced in getting deep learning algorithms to run on iPhone:

The deep-learning models need to be shipped as part of the operating system, taking up valuable NAND storage space. They also need to be loaded into RAM and require significant computational time on the GPU and/or CPU. Unlike cloud-based services, whose resources can be dedicated solely to a vision problem, on-device computation must take place while sharing these system resources with other running applications.

Most importantly, the computation must be efficient enough to process a large Photos library in a reasonably short amount of time, but without significant power usage or thermal increase.

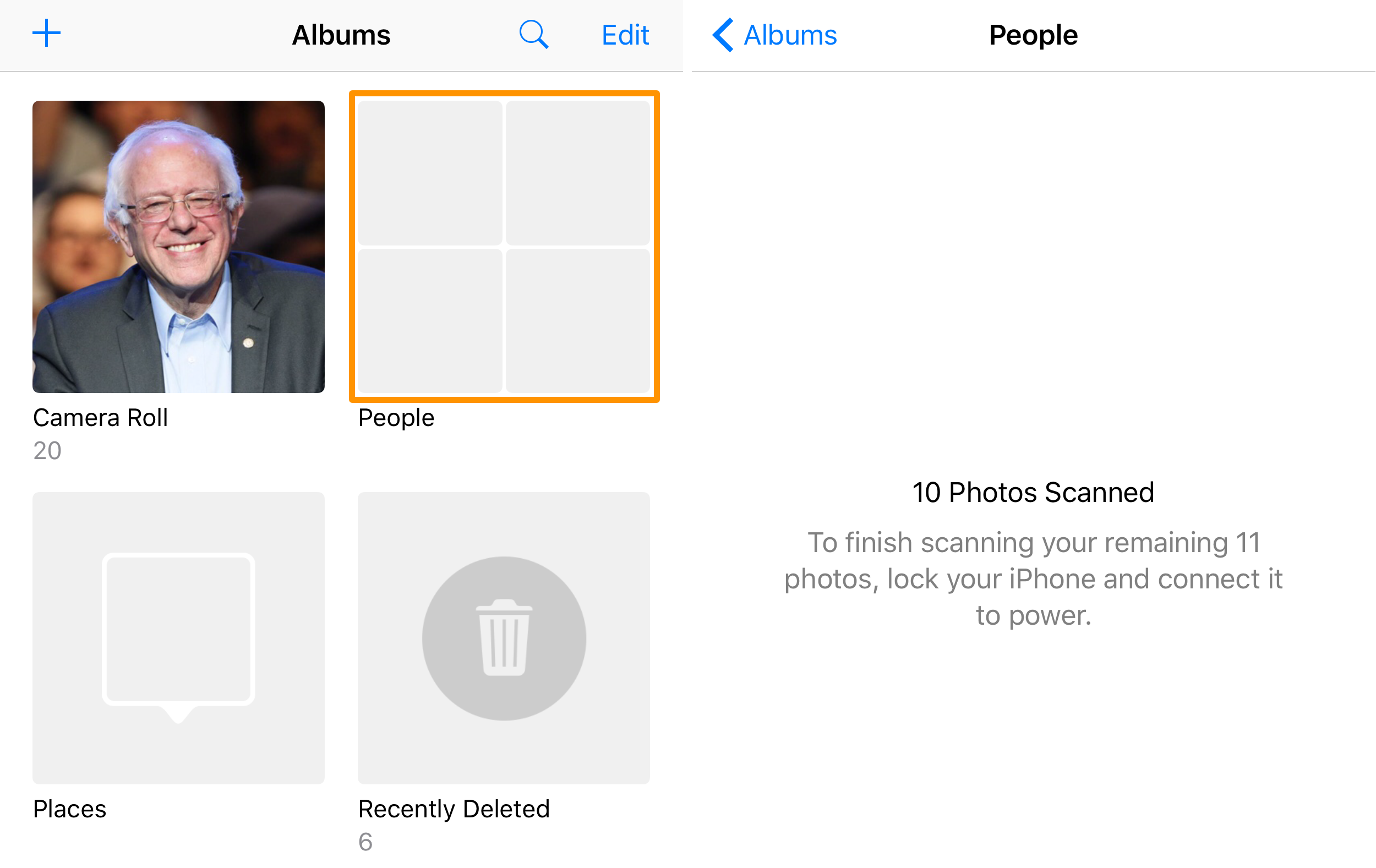

To overcome those challenges, Apple uses BNNS (Basic Neural Network Subroutines) and Metal to take full advantage of the in-house designed GPUs and CPUs built into iOS devices. You can actually feel this on-device face detection at work after upgrading to a major new iOS version.

Such an upgrade usually prompts iOS to re-scan your whole Photos library and run the face detection algorithm on every photo from scratch, which can cause the device to heat up or slow down until Photos has finished scanning.

Apple started using deep learning for face detection in iOS 10.

With the release of the new Vision framework in iOS 11, developers can now use this technology and many other computer vision algorithms in their apps.
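For developers, a minimal sketch of what calling the Vision framework for face detection might look like follows. It uses `VNDetectFaceRectanglesRequest` and `VNImageRequestHandler` from Vision; the helper names `detectFaces(in:)` and `pixelRect(forNormalized:imageWidth:imageHeight:)` are hypothetical and exist only for illustration, and the sketch assumes you already have a `CGImage` in hand.

```swift
import Vision
import CoreGraphics

/// Hypothetical helper: converts a Vision bounding box (normalized to 0...1,
/// origin at the bottom-left) into pixel coordinates with a top-left origin.
func pixelRect(forNormalized box: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let w = CGFloat(imageWidth)
    let h = CGFloat(imageHeight)
    return CGRect(x: box.minX * w,
                  y: (1 - box.maxY) * h,   // flip the vertical axis
                  width: box.width * w,
                  height: box.height * h)
}

/// Hypothetical helper: runs face detection on-device and returns one
/// rectangle per detected face, in pixel coordinates.
func detectFaces(in image: CGImage) throws -> [CGRect] {
    let request = VNDetectFaceRectanglesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])

    // perform(_:) runs synchronously, so call it off the main thread in a real app.
    try handler.perform([request])

    let faces = request.results as? [VNFaceObservation] ?? []
    return faces.map {
        pixelRect(forNormalized: $0.boundingBox,
                  imageWidth: image.width,
                  imageHeight: image.height)
    }
}
```

The image is handed directly to the request handler and nothing in this sketch touches the network, which fits the on-device approach described above.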

Apple notes that it faced “significant challenges” in developing the Vision framework to preserve user privacy and allow the framework to run efficiently on-device.